Side Project

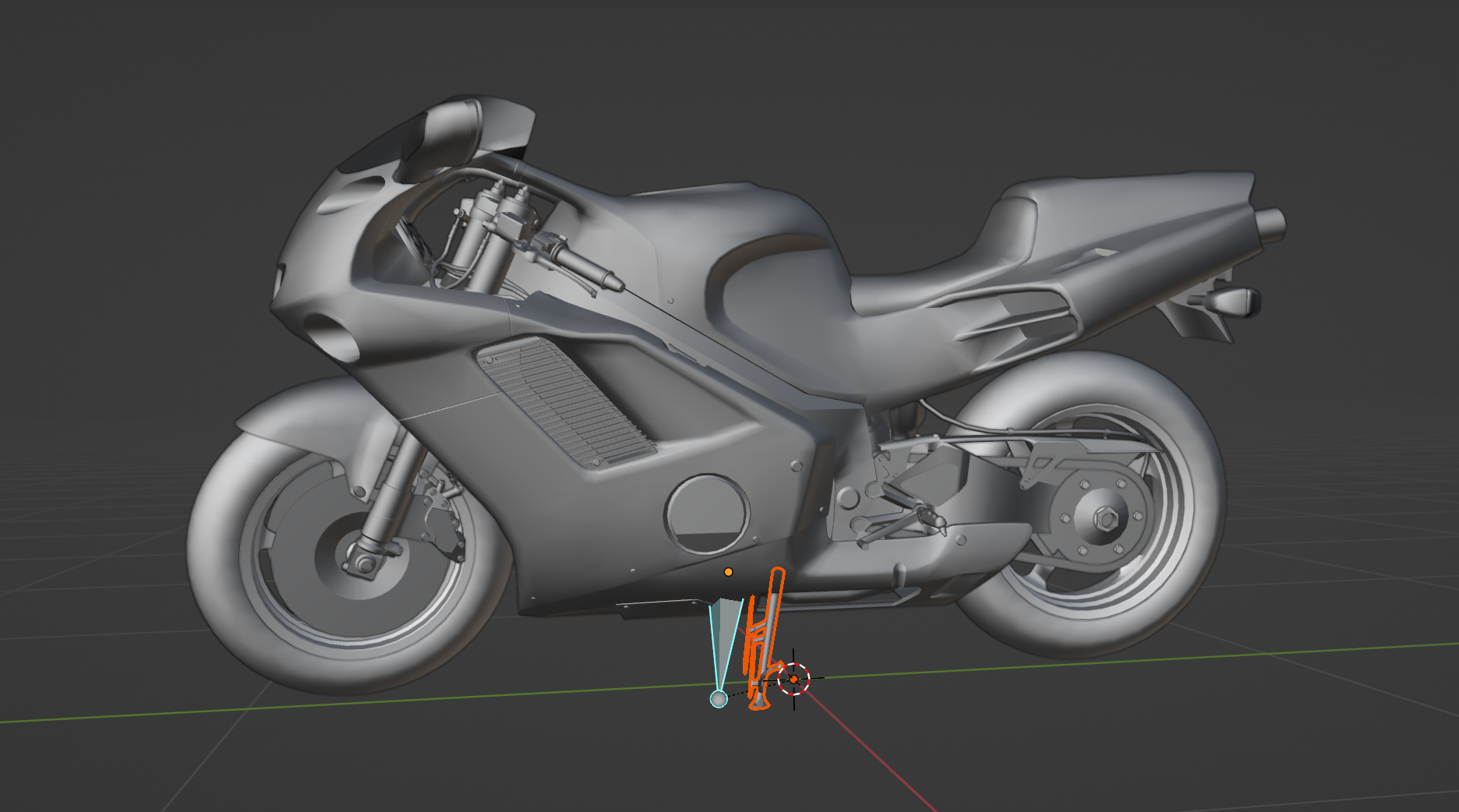

My friend Alexa is a SAG-AFTRA actor and has been dying to do motion capture, so we decided to record a small video for fun about a motorcyclist following the tracks of a fellow rider. We made the character a motorcyclist so we wouldn't have to do facial mocap, and kept the performance simple so we wouldn't need the Manus gloves. We shot this without knowing what the proportions of the bike would be, so I keyframed the control rig on animation layers to position their avatar better. Right now I'm fixing the clipping of the hands, and I still need to import the flashlight and pin it to the rigidbody on their right hand. I also need to swap models once the motorcyclist removes their helmet, and attach the helmet to their left hand. There is a little bit of jiggling in the animation, which I think comes from the number of rigidbodies that overlap with the skeleton, so I'm also going to clean up the animation data.

Final Project

For our first reservation for the final, Alexandra performed as the band's singer, while AJ gave direction and I engineered. We used part of the Optitrack calibration wand as the microphone rigidbody, and adjusted it so that the hand markers would be farther from the microphone's markers, since the system was getting confused.

AJ and Alexandra performed together in our second reservation slot, and this time Alexandra borrowed the guitar from the Micro Studio so that we could have a guitar rigidbody prop. I engineered and gave direction on what looked good in the Optitrack system, since it had a harder time tracking performers lying on the ground, and our props had markers near the hands and covering the front of the body.

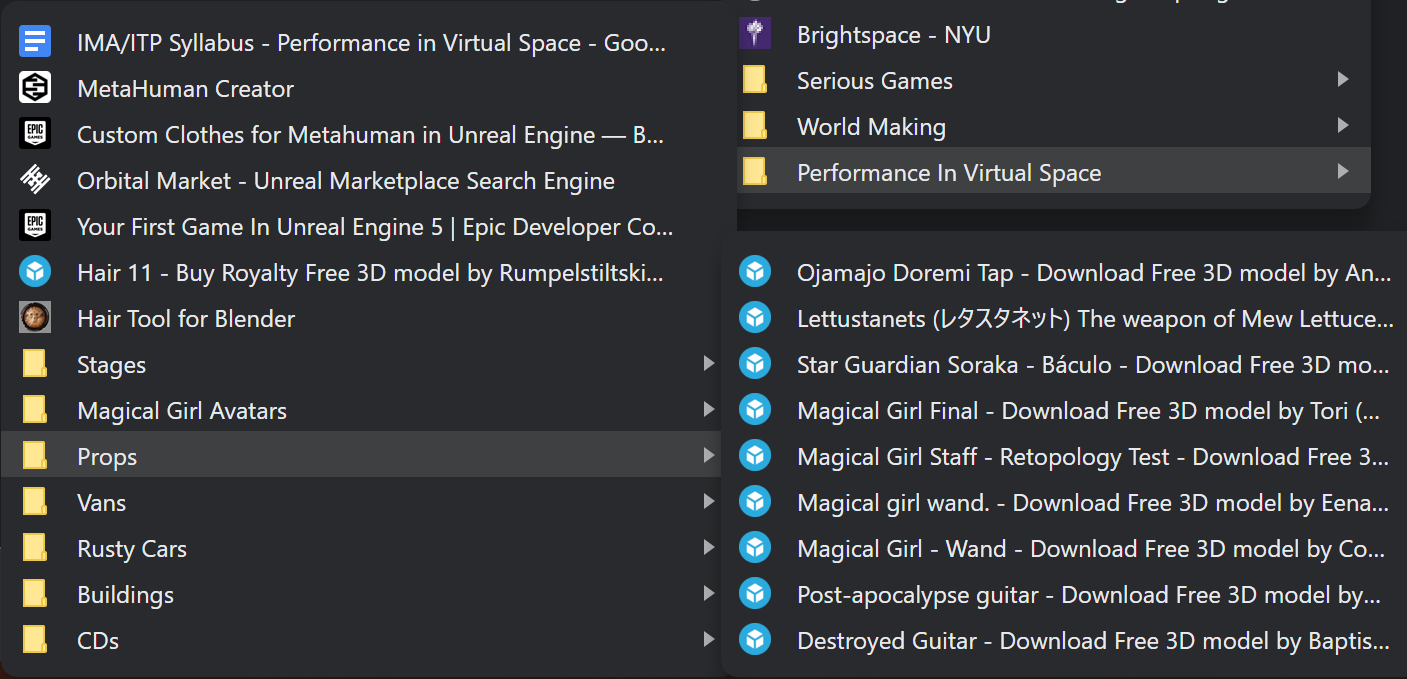

Since the avatars, props, and rooftop stage scene were done, my main job was to create the opening scene where the player finds the band's CD in the apocalypse. I started with the Rural Australia set from the Unreal Marketplace as a base and deleted everything except the arid ground, which felt perfect for showing apocalyptic desolation while still having a road. I used the GoodSky plugin to make an eerie mix of red and purple for the sky, and added light wind ambience to highlight the emptiness. I found an artist who does scans of destroyed buildings and added a bunch of different meshes, arranging them so that the player would not easily see the boundaries of the level, and shrank the blocking volume so the player's exploration would be limited. I found another artist who does scans of destroyed cars and added those in for flavor, as well as a rusty van that was open enough to place props inside, such as the magical girl wand and the guitar, which I gave a rust texture. The CD and CD player were set up as triggers that send the player to the rooftop scene, so I built a blueprint that tells the player to press E to interact with the CD. I placed lights on the van's brake lights and inside the van to draw the player's eye there, and made the CD bright purple and interactable.

The particle effects for the meteors and the crowd silhouettes were not made, the motion data still has some problems, and the ending scene back in the apocalypse level is missing, but the two finished scenes do transition into each other.

Week 5: Utilizing Props and Triggers

I found many different props that would work for our project, such as a magical girl wand to serve as the microphone and a baby blue guitar that goes perfectly with our pastel avatar, both of which we'll use in the concert scene. I also bookmarked every asset that was either interesting or used in the project, so that when it's finished we can credit the 3D modelers.

I also made a bunch of avatars for the crowd in the rooftop scene, but we decided to go with the silhouette crowd models instead. Alexandra edited the pastel avatar to be more lavender and added a heart onto the skirt.

Week 4: Creating Sets and Filming

I thought that having the performance take place outside would allow more options for representing the world ending, and came up with the idea of the band performing on a rooftop while meteors start falling from the sky. I found a stage on Sketchfab that reminded me of a rosebud, which felt perfect for magical girls. I separated the cloth part of the mesh from the metal bars in Blender, then brought it into Unreal. I grabbed the Matrix City Sample from the Unreal Marketplace and found that all of the buildings were a single static mesh, which was perfect for our project. I flew around the level a lot looking for the perfect rooftop near the middle: low enough that the player would be unable to see the boundaries of the city, but high enough to see the sky. I used the default third-person controller to check how the level would look to the player.

I created these two magical girls in ReadyPlayerMe, since these were the best outfits available for the aesthetic we were going for. These models ended up being our final avatars, though we later removed the glasses and swapped the hair on the one on the right.

AJ and Alexandra then placed the avatars onto the stage in Unreal, added purple lights to the stage, and fixed the missing collisions. Since I had never used Unreal before, I was confused about why the meshes did not come with collisions on their own.

Week 3: Performing as Avatars

We got into groups to create a final project, so I booked three reservation times in the Optitrack rooms to shoot it. This week AJ, Ranna, Alexandra, and I worked in the mocap room together for the first time. We decided to create a short narrative piece about a magical girl band in the apocalypse whose tour van breaks down, and we thought it would be good practice to capture the band members walking on stage at their last concert.

AJ and I did the motion capture performance, Alexandra directed/engineered, and Ranna took over engineering when she arrived.

Our group had a lot of trouble with the ReadyPlayerMe avatars, so AJ and I did a lot of troubleshooting in Motionbuilder. We also realized that we needed to convert our TAK files to FBX in Motive.

Once Ranna and AJ got the files converted, I edited the takes in Motionbuilder, attached them to AJ's ReadyPlayerMe avatar, and brought the animation into Unreal. The textures on the ReadyPlayerMe avatars had trouble connecting in Unreal, but we later got them working.

Week 2: Creating Rigged Avatars

The Metahuman creator really intrigued me, so I treated it the way I play The Sims and tried to create a cast of characters. I made a character from an action game I coded in another class and tried to get the Metahuman to match my drawing as closely as possible. Certain sliders are very limited, especially the nose controls, hairstyles, and facial proportions. While there are many skin textures to choose from, there are hardly any cosmetics or scars, which don't seem like they would be too difficult to add. It's interesting because people often complain about the limitations of the base game in The Sims, which is why its custom content community is so large and active, but this is even more limiting. I think the most robust character creators I've used are probably the ones in Dragon Age: Inquisition and Fallout 4.

For my other class, in Unity, I'm making a section of a story I've been outlining for a while, so for fun I also tried to make the characters from that story: a brother, a sister, and the brother grown up. Trying to make multiple people made it really apparent how bad the hair options are. They reminded me of trying to make characters in video games that are... not exactly marketed towards women, where the "feminine" hair options are always confounding. I know that simulating long hair physics is a limitation, but there are just weird choices in what they decided was good enough, like the messy baby hairs on the long straight options, the messiness of the long hair options overall, to be honest, and the random Rey Skywalker space buns that don't look nearly as good as hers.

There is a disproportionate number of options for short straight hair, only a few terrible options for long hair, and not a single long textured style. In seemingly every video game there are only ever three options for textured hair: short coils, an afro, and cornrows, and the Metahuman creator follows that trend. During Covid my friend started working on the open source afro library, which is filled with both everyday and fantastical hair, but in the Metahuman creator only straight hair gets uncommon hairstyles, like a mohawk or baby bangs with braided buns. Hair is important to a lot of people, so it's strange that there are so few hairstyles in general in the creator.

I then tried to make myself as accurately as I could within the creator's limitations, so I looked at pictures of my face from different angles to try to capture my facial structure and skin texture. It's really uncanny to look at, because it looks like me from certain angles and in certain features like the eyes, but it doesn't quite match my likeness, especially in the nose. I do struggle to capture what I look like when drawing, so that could be a factor. It's interesting because, as the reading mentioned, people tend to make an idealized version of themselves when making avatars, so when I've made characters that are "me" in games, they're usually either something close enough to me in a limited system like Skyrim or just going for vibes.

My Animal Crossing character looks more like me, but my Elden Ring character also feels like it represents me even though we look nothing alike. I noticed that I make myself either more feminine or more masculine in my avatars, and that while I tend to pick green hair because my hair has been that color for six-ish years now, I also pick other colors because I've dyed my hair so many different ways.

I was able to import the Metahuman into Unreal and have it ready for adding motion data from Motionbuilder.

Week 1: Storyboards for Character-Specific Movement

I decided to storyboard a character picking up an animal they hunted while holding a baby in a sling. This character is overprotective of their child, so they keep the baby in a sling on their front instead of their back, and don't even consider setting the baby down to make picking up the animal easier. This suggests that the character is solely responsible for the baby, or that they do not trust anyone else with their child. Picking up the heavy animal while being careful not to jostle the baby or bump it with the animal is a struggle, so the movement is very awkward for them.

I considered having the baby situated more squarely on the parent's chest instead of towards the side, and then having the animal slung over the character's shoulders or back, but picking up the animal that way would have jostled the baby more. I also considered keeping the baby in front and having the character use some sort of rope or sled to drag the animal behind them, but felt that picking the animal up was a stronger choice. For capturing the motion, I was considering whether a bag of water would work as a stand-in for the animal.